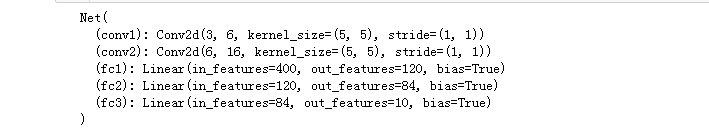

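# LeNet, definition style 1. Note that LeNet as originally proposed did not use max pooling or ReLU activations.
import torch
import torch.nn as nn
import torch.nn.functional as F  # relu and max_pool2d live in torch.nn.functional


class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        # Two 5x5 convolutions: 3 -> 6 -> 16 channels
        self.conv1 = nn.Conv2d(3, 6, 5)
        self.conv2 = nn.Conv2d(6, 16, 5)
        # Three fully connected layers ending in 10 class scores
        self.fc1 = nn.Linear(16 * 5 * 5, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)

    def forward(self, x):
        # conv -> ReLU -> 2x2 max pool, applied twice
        x = F.max_pool2d(F.relu(self.conv1(x)), (2, 2))
        x = F.max_pool2d(F.relu(self.conv2(x)), 2)
        # Flatten everything except the batch dimension
        x = x.view(x.size(0), -1)
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = self.fc3(x)
        return x


net = Net()
print(net)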
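The `16*5*5` input size of `fc1` is not arbitrary: it follows from the feature-map size after the two convolution and pooling stages. A minimal sketch of that arithmetic, assuming a 32x32 input image (the CIFAR-10 size; the helper name `conv_out` is made up for illustration):

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Side length produced by a square convolution or pooling window."""
    return (size + 2 * padding - kernel) // stride + 1


side = 32                                  # assumed input: 3 x 32 x 32
side = conv_out(side, kernel=5)            # conv1, 5x5, no padding -> 28
side = conv_out(side, kernel=2, stride=2)  # 2x2 max pool           -> 14
side = conv_out(side, kernel=5)            # conv2, 5x5, no padding -> 10
side = conv_out(side, kernel=2, stride=2)  # 2x2 max pool           -> 5

flat_features = 16 * side * side           # 16 channels after conv2
print(side, flat_features)                 # 5 400
```

With a different input resolution the flattened size changes, so the first `nn.Linear` would need to be adjusted accordingly.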

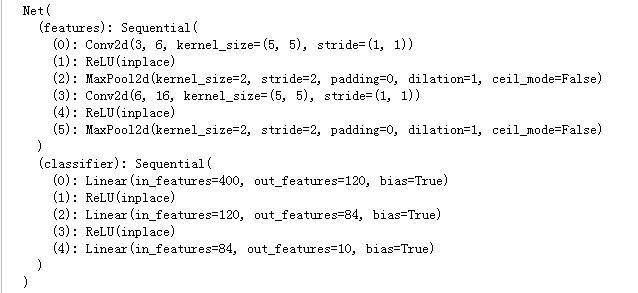

# LeNet, definition style 2. Note that LeNet as originally proposed did not use max pooling or ReLU activations.
import torch
import torch.nn as nn


class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        # Convolutional feature extractor
        self.features = nn.Sequential(
            nn.Conv2d(3, 6, 5),
            nn.ReLU(),
            nn.MaxPool2d(2, 2),
            nn.Conv2d(6, 16, 5),
            nn.ReLU(),
            nn.MaxPool2d(2, 2),
        )
        # Fully connected classifier; 400 = 16 * 5 * 5
        self.classifier = nn.Sequential(
            nn.Linear(400, 120),
            nn.ReLU(),
            nn.Linear(120, 84),
            nn.ReLU(),
            nn.Linear(84, 10),
        )

    def forward(self, x):
        x = self.features(x)
        # Flatten everything except the batch dimension
        x = x.view(x.size(0), -1)
        x = self.classifier(x)
        return x


net = Net()

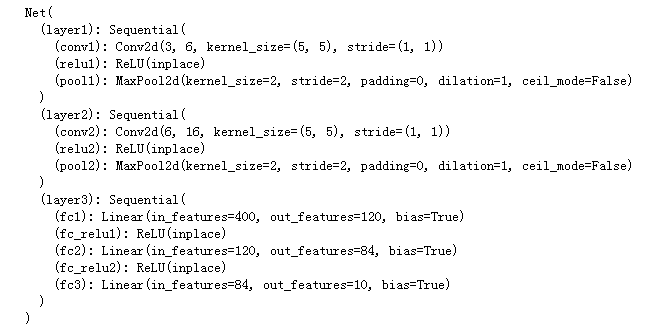

print(net)#LeNet 定义结构方式3 事实上LeNet被提出时,并没有用到最大池化与ReLU激活函数

import torch
import torch.nn as nn


class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        # First conv stage, with explicitly named sub-modules
        layer1 = nn.Sequential()
        layer1.add_module('conv1', nn.Conv2d(3, 6, 5))
        layer1.add_module('relu1', nn.ReLU(inplace=True))
        layer1.add_module('pool1', nn.MaxPool2d(2, 2))
        self.layer1 = layer1

        # Second conv stage
        layer2 = nn.Sequential()
        layer2.add_module('conv2', nn.Conv2d(6, 16, 5))
        layer2.add_module('relu2', nn.ReLU(inplace=True))
        layer2.add_module('pool2', nn.MaxPool2d(2, 2))
        self.layer2 = layer2

        # Fully connected classifier; 400 = 16 * 5 * 5
        layer3 = nn.Sequential()
        layer3.add_module('fc1', nn.Linear(400, 120))
        layer3.add_module('fc_relu1', nn.ReLU(inplace=True))
        layer3.add_module('fc2', nn.Linear(120, 84))
        layer3.add_module('fc_relu2', nn.ReLU(inplace=True))
        layer3.add_module('fc3', nn.Linear(84, 10))
        self.layer3 = layer3

    def forward(self, x):
        conv1 = self.layer1(x)
        conv2 = self.layer2(conv1)
        # Flatten everything except the batch dimension
        fc_input = conv2.view(conv2.size(0), -1)
        fc_out = self.layer3(fc_input)
        return fc_out


net = Net()

print(net)

The code was run in Jupyter, where the printed network structure can be seen. Article source: 联网快讯 - https://x1995.cn/3244.html
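Beyond reading the `print(net)` output, one quick way to convince yourself that the different definition styles describe the same network is to compare parameter counts and output shapes. The following is only a sketch: the class name `FunctionalNet`, the helper `count_parameters`, the single flat `nn.Sequential` (with `nn.Flatten` standing in for the `view` call), and the 32x32 dummy input are all assumptions made for illustration, not code from the original post.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class FunctionalNet(nn.Module):
    """Style 1: layers as attributes, activations applied in forward()."""

    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 6, 5)
        self.conv2 = nn.Conv2d(6, 16, 5)
        self.fc1 = nn.Linear(16 * 5 * 5, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)

    def forward(self, x):
        x = F.max_pool2d(F.relu(self.conv1(x)), 2)
        x = F.max_pool2d(F.relu(self.conv2(x)), 2)
        x = x.view(x.size(0), -1)
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        return self.fc3(x)


# Same layers expressed as one flat nn.Sequential.
sequential_net = nn.Sequential(
    nn.Conv2d(3, 6, 5), nn.ReLU(), nn.MaxPool2d(2, 2),
    nn.Conv2d(6, 16, 5), nn.ReLU(), nn.MaxPool2d(2, 2),
    nn.Flatten(),
    nn.Linear(400, 120), nn.ReLU(),
    nn.Linear(120, 84), nn.ReLU(),
    nn.Linear(84, 10),
)


def count_parameters(model):
    """Total number of weights and biases in the model."""
    return sum(p.numel() for p in model.parameters())


functional_net = FunctionalNet()
dummy = torch.randn(1, 3, 32, 32)  # one image of the assumed input size

functional_params = count_parameters(functional_net)
sequential_params = count_parameters(sequential_net)
functional_shape = tuple(functional_net(dummy).shape)
sequential_shape = tuple(sequential_net(dummy).shape)

print(functional_params, sequential_params)
print(functional_shape, sequential_shape)
```

Both models should report the same parameter count and produce a `(1, 10)` output. The weights themselves differ because each model is initialized randomly.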


All three definitions were trained on the CIFAR-10 dataset for 10 iterations, and the final accuracies were essentially the same. Personally, I prefer the second style.
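The post does not show its training code, so here is only a rough sketch of what such a loop might look like. Everything beyond the network layers is an assumption: the cross-entropy loss, SGD with `lr=0.01` and `momentum=0.9`, the batch size of 8, and above all the random tensors standing in for a real CIFAR-10 mini-batch (a real run would load the dataset, for example through torchvision).

```python
import torch
import torch.nn as nn
import torch.optim as optim

torch.manual_seed(0)  # make the sketch repeatable

# Same layers as the networks above, written as one flat nn.Sequential.
model = nn.Sequential(
    nn.Conv2d(3, 6, 5), nn.ReLU(), nn.MaxPool2d(2, 2),
    nn.Conv2d(6, 16, 5), nn.ReLU(), nn.MaxPool2d(2, 2),
    nn.Flatten(),
    nn.Linear(400, 120), nn.ReLU(),
    nn.Linear(120, 84), nn.ReLU(),
    nn.Linear(84, 10),
)

# Synthetic stand-in for one CIFAR-10 mini-batch (NOT real data).
images = torch.randn(8, 3, 32, 32)
labels = torch.randint(0, 10, (8,))

criterion = nn.CrossEntropyLoss()                               # assumed loss
optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.9)  # assumed optimizer

losses = []
for step in range(10):
    optimizer.zero_grad()             # clear gradients from the previous step
    logits = model(images)            # forward pass: (8, 10) class scores
    loss = criterion(logits, labels)
    loss.backward()                   # backpropagate
    optimizer.step()                  # update the weights
    losses.append(loss.item())

print(losses[0], losses[-1])
```

Because the loop only touches `model.parameters()` and calls `model(images)`, any of the three `Net` definitions can be dropped in unchanged, which fits the observation that the choice of definition style does not affect the results.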
